This is an old post from my old blog. It belongs here.

How many accounts do you have?

- Banks (savings, checking, ATM, etc.)

- Credit cards (more than one? More than four?)

- Car care clubs

- Insurance companies (multiple?)

- Mortgage(s)

- Maintenance service contracts (car, home, appliances, etc.)

- Doctors (yours, your kids' and your pets')...

Do you access these services on-line for bill paying and general management and tracking purposes? What about other on-line accounts?

- Special interest forums.

- Registration and licensing accounts (for multiple product vendors).

- Technical support (for multiple product vendors).

i have so many accounts to keep track of, with different on-line URLs and different user name and password combinations, that i cannot remember more than a couple of them. My web browsers automatically fill in the fields for me. When that fails, i keep a note listing each account and its specific details and passwords on my Tapwave Zodiac (my choice of PDA, still, despite Tapwave ceasing to exist).

Yes, not keeping the information in my head makes it easier to forget. Maybe you would do better if you didn't grow up with learning disabilities... but would you be able to remember 46 accounts and their associated passwords and IDs? That's how many i currently track on my Zodiac.

Points of interest:

- Security pundits of the world will tell you not to use the same password for every account. Apparently these pundits have superhuman abilities, able to remember more than 7 (plus or minus two) unique identifiers and which accounts they go to. i may have some autistic genius traits, but keeping track of 46 accounts with only my brain is far beyond my abilities. Maybe if the information were meaningful to me, but...

- There is a set of determining factors devised to inform users of the strength of their password. It usually considers the number of characters, mixed case (yes, capitals and lower case letters are DIFFERENT things in computer land, folks... this is called "case sensitivity"), and the use of numeric and special characters.

- Those superhuman security pundits recommend 8 characters or more... with mixed case... and numbers... and, yeah, don't forget the special characters, too.

- Security pundits also tell you not to use a real word that exists in a dictionary, especially in your native language, or, at the very least, to intermix numbers and special characters among the letters of the word.

- Certainly you should never use a word that people who know anything about you might be able to guess, even if THAT word is not in an official dictionary.

- The real recommendation is that you use nonsense passwords...

- With mixed case letters

- And numbers

- And special characters

- At least 8 characters, but "more is better"...

- Hell, you'd better make that 14 characters, just to be safe.

- And never, ever use the same password more than once.
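For the curious, here is roughly what that checklist looks like once a website turns it into code. This is a minimal Python sketch, not any particular site's implementation: the 8- and 14-character thresholds come straight from the list above, the function names are hypothetical, and the tiny word list is a stand-in for a real dictionary check (which would also catch words with a few letters swapped for digits).

```python
import string


def strength_checks(password: str) -> dict[str, bool]:
    """Report which of the usual 'strength' criteria a password meets."""
    return {
        "at_least_8_chars": len(password) >= 8,
        "at_least_14_chars": len(password) >= 14,  # the "just to be safe" length
        "has_lower": any(c.islower() for c in password),
        "has_upper": any(c.isupper() for c in password),
        "has_digit": any(c.isdigit() for c in password),
        # Assumption: "special characters" means ASCII punctuation.
        "has_special": any(c in string.punctuation for c in password),
    }


def meets_pundit_rules(password: str, dictionary: set[str]) -> bool:
    """True only if every criterion passes and the password is not a plain word.

    The dictionary test here is deliberately naive: it only rejects exact
    (case-insensitive) matches against the supplied word list.
    """
    checks = strength_checks(password)
    not_a_word = password.lower() not in dictionary
    return all(checks.values()) and not_a_word


if __name__ == "__main__":
    tiny_dictionary = {"parrot", "password", "sunshine"}  # hypothetical word list
    for pw in ["parrot", "Parrot99", "N0rw3gian-Blue!x"]:
        print(pw, meets_pundit_rules(pw, tiny_dictionary))
```

Note that "Parrot99", which plenty of real sites would happily accept, fails here: no special character, and fewer than 14 characters. Now multiply that by 46 accounts, each with its own slightly different version of these rules.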

At least 65% of my accounts demand a password format which my favourite standby password fails to accommodate. About 50% of those accounts will not allow me to make the same generic modification to my standby password. Still other accounts require me to regularly enter personal information, just so they can be sure it's really me. (don't forget your dead parrot's middle name, kids)

One company, astoundingly, and quite opposed to the beliefs of superhuman security pundits, limits the user's log-in name to eight characters. This is because they are still living in the past. The 8-bit past. The *nix past. The real point, though, is that this leaves me with one single account that accepts neither my first initial and last name nor my email address, in full, as a user ID (the two most common account name types for a person to use, based on current web standards that none of us voted for).

So, let me get this straight... in order to have "respectable" security in place with your accounts (unless you're using a card-swiping mechanism for an ATM, which only demands a FOUR-digit "PIN")... you have two options:

- Be superhuman

- Write them all down somewhere

To those same pundits, admins and geeks, i go so far as to declare (not suggest, but DECLARE):

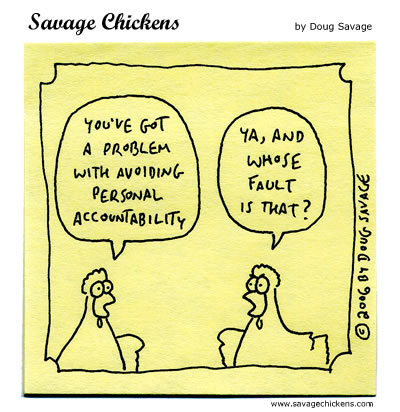

Your security demands CAUSE security breaches by REQUIRING human beings to write things down!!

Where is humane computing?

Back in the day, you know, when everything was limited to 8 characters (and PINs of four numbers were not marks of shame), there were no raging disputes about these things. Mostly because:

- The systems were few and far between

- The technology did not exist for 256-bit encryption of 14-character passwords with mixed content

- No one had yet decided to sponge their fortune from "clients" who needed "Security Advisors," or other such titles, to solve a problem not yet invented

- "Hackers" were programmers and "Crackers" were busting copy protection on 8-bit games.

- People didn't really care (largely because of points one and three).

Yes, we must make it all secure, now. It didn't matter before (except to some crazy wingnuts playing with something called the "CLI" and something called "ARPANET" on some archaic computer systems created in the 1960s), but there is nothing more important today than security. Ask anyone who recently moved to Windows Vista and they will tell you just how much they like the new security features of their computer's latest operating system. Yes, yes, it's all about SECURITY!

(Oh, and privacy. How many dead trees do your service providers mail to you, and make you read at the office, defining their privacy policies again and again... and again, even though pretty much nothing has changed since the laws they must follow were first established? It's all about covering corporate butt. Take a look at my recent set of articles on Flickr and ask me how many of those complaints are "covered" by Flickr's claim of protecting users' privacy... not MY privacy, per se... just... users in general.)

Hell, screw reason, sensibility and rationality. Screw the human beings trying to use these systems!

- It's not about you.

- It's not about service.

- It's all about "SECURITY!"

- It's all about "PRIVACY!"

- It's all about Covering Corporate Ass! (and making money doing it)

If you are actually able to get to your data, it's just not secure enough!

Full Disclosure:

i'm a former computer geek. Or so said the flame to which... i mean, the standards to which i was held way back in middle school. i was a computer geek when it was uncool and could get you a punch in the gut, just for fun. Today, thanks to people at advertising agencies working for Apple and several other technology companies who are constantly desperate to widen their market and user base, computers and geekery are somehow "cool."

Sorry.

"Kool."

Now that it's kool to be a computer geek and "hot" girls wear tiny t-shirts with "i love nerds" on them... i've given it all up (as much as i can, given that i cannot hire my own technical support geeknerd to fix things for me while i go outside and enjoy the sunshine).

i worked in "the industry" for two decades (almost). At one point or another, i did a little of pretty much everything: programming, customer service, technical support, network management, etc. (not that the network managers i was filling in for would admit that, as i have no magical certificate that declares me a "specialist").

i even crusaded (rather intensely) for an "alternative operating system" called BeOS. i briefly crusaded for Haiku. During my BeOS/Haiku crusades, i started to recognize that computers are really just junk, made by geeks, for geeks. The attitude of most programmers (not all) and companies (not all) was "RTFM." (wiki that one)

i discovered that this was not at all about making good stuff that would solve problems and make life easier for everyone. It was an elite club and normal people were not allowed (but they were expected to buy the stuff and shut up when it didn't work, because it must be the user's fault).

The computer industry used to be a fascination to me, but now i just want the tools to do what it says on the tin. If it's broken out of the box, i shouldn't have to fix it. It should have a warranty. Not a statement in the "End User License Agreement" (which you never read, let alone agree to) saying "The entire risk of the purchase is on you. No warranty is expressed or implied, including fitness for a particular purpose."

i call myself a "born again USER." i used to have a career making the lives of other users easier when they came to me saying "I just don't get this computer stuff." i loved to tell them that it wasn't their fault.

This article is probably like walking through a room full of ex-cons with all of my personal information printed, legibly, on my t-shirt, while giving them the finger(s) and calling their mums whores. But, you know what?

A computer is supposed to be a tool, people.

Make it work,

use it,

and

GET OVER IT!